This Black Woman Does Not Exist

Or: What I Learned About Algorithmic Bias From Creating the First AI-generated faces on Wikimedia Commons

Above: The first nine images generated by an Artificial Intelligence to be placed into Wikimedia Commons. Find them here.

As a Wikipedian and an artist interested in AI, I wanted to upload some images created by an artificial intelligence to Wikimedia Commons, the image repository of creative-commons licensed works. It’s often thought of as “the source of Wikipedia’s images” but it’s an entity in its own right. The images, the first of their kind on Commons, spawned an interesting discussion among the site’s volunteers (read it here) and are now used to illustrate Wikipedia articles on Human image synthesis, StyleGAN, and Generative Adversarial Network.

I also have a very weird, nerdy sense of pride for constructing this banner explaining why the images were public domain, which feels straight out of Blade Runner:

However, in trying to create a diverse series of faces, I confronted a “glitch” in the system, and a visual representation of what algorithmic bias looks like.

A Note on Algorithmic Bias

StyleGAN, NVIDIA’s model that was trained on Flickr portraits, was incredibly bad at generating convincing faces for black women. (A note here on what, exactly, the already blurry concept of “race” means when it’s re-created by an algorithm sorting through thousands of faces to think about what a face is supposed to look like: I have to try to remember that AI-generated faces do not have a “race” of any kind. Call me out!).

The black woman who finally came to appear on Commons was the result of generating hundreds of images of women — a process that I didn’t have to do for any of the others. When black skin tones were generated, the women almost always had what might more likely be associated with Asian or South Asian features. When the algorithm generated images with features more likely to be associated with “black” faces, the images weren’t as detailed, and oftentimes distorted.

Below you see the first three “black” women generated in that session by StyleGAN, and one randomly selected “white” woman from the same session for comparison. The first image is less detailed, the second image is highly distorted; the final image (a small variation of the previous) is somewhat convincing, but not up to par with the random “white” woman on the right.

A Hypothesis

If we were dealing with biased datasets, this is exactly what it would look like: with less knowledge about what black women look like, the GAN is forced to “guess” more often, which is why you get half-assed renderings and glitchy distortions. If white women were represented more often, you’d get many more near-perfect facsimiles of white human faces.

Turns out, you do. Here’s how many images I had to create before getting to a single, passable face with black skin tone:

When NVLabs shared the training data for the set, it offered the caveat that “the images were crawled from Flickr, thus inheriting all the biases of that website.” In addition, the images taken from Flickr were specifically shared under a Creative Commons license; then, they were cropped by a machine and filtered once more by humans using Mechanical Turk, a website where you can pay humans very small amounts of money for very small tasks. This means that the data is biased by cultural norms around the sharing of photos — not only who shares and uploads photos on Flickr, but also who is navigating Creative Commons Licensing. There was additional bias in the machine that cropped the images (who did it select and who did it crop? Was it good at recognizing black faces? If it was, it would be an anomaly in face recognition software). Additionally, bias would come from the final filtering process by humans, who were tasked with deciding which images were clear, usable, and human (rather than statues, for example).

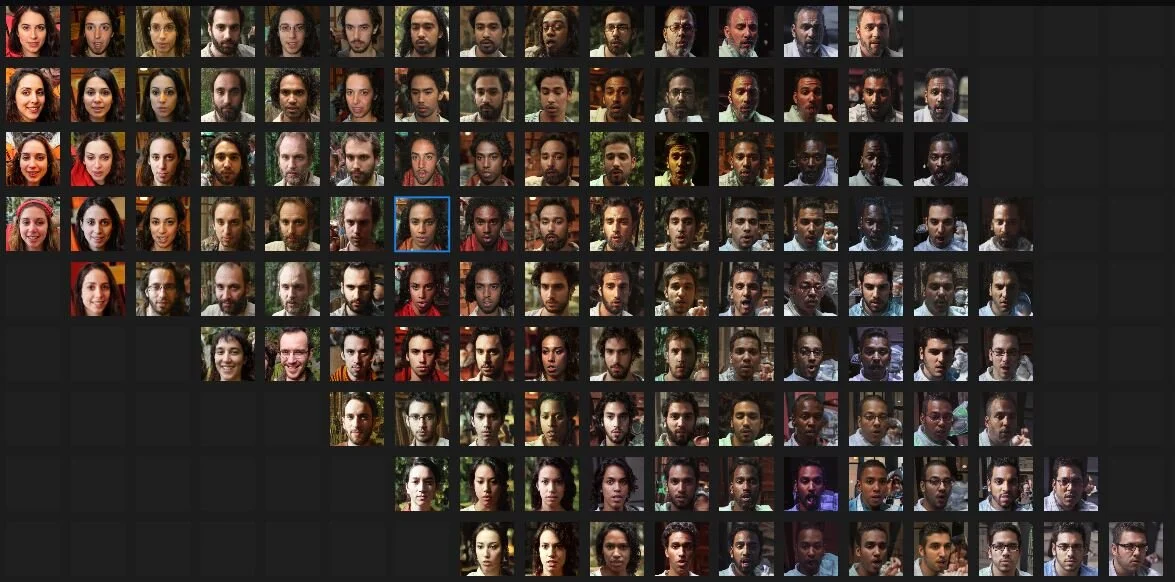

The original 70,000 images used to train the GAN are publicly available (find them here). To test my hypothesis, I picked four folders to determine how many of the training images were pictures of black women. That would be 4,000 samples out of a population of 70,000, which my late-night math suggests is a 2% margin of error at a 99% confidence level, if that’s the kind of thing you’re looking for. Basically: I think 4,000 was a good chunk to look at to generalize about the rest of the data.

The parameters were that any photo with a face in it was counted once, even if there was more than one face. I counted children and the elderly. Also, a caveat: I’m a white man, and so this distinction of racial categories is informed by that. I have no authority to determine anyone’s race or gender from a photograph, and so of course, this process is biased and honestly kind of weird. I’ve included some data to help you run this research on your own, if you find a different outcome, let me know.

Results

“Of the 4,000 images sampled, 102 contained black women, or just about 2.55%. By comparison, there were 1,152 white women, or 28.8%.”

Of the 4,000 images sampled, 102 contained black women, or just about 2.55%. By comparison, and by a much more laborious process, the number of images of white women in a different random sample is 1,152, or about 28.8%.

Additionally I discovered:

1 Henry Kissinger

1 white guy in a sombrero

1 white guy in an Native American headdress

3 John Kerrys

1 image of my friend Megan taken by my friend Astra

It’s also evident that greater numbers of images allows for greater diversity of images, so for example, there were significantly more photographs of white girls and babies. To make matters worse, in the sample of black women, I found two pairs of duplicate women (ie, same women, different photos) further reducing the diversity of data in the set, since duplicates of facial data is less helpful in training a model that uses that data to create new faces: presumably, the machine learns a lot less the second time it reads a face.

Discussion

Thinking about representation in generated images may seem low on our list of priorities for face recognition problems. But if we don’t consider some way of making sure the root of machine learning processes for GANs isn’t racially skewed, the consequences are going to linger. Consider the effects of film that was developed for white skin, for example — which could very likely be a contributing factor to why there aren’t more photographs of black women on Flickr in the first place.

GANs aren’t just going to be used for artsy generative photography. But this is a dataset that could be used in other applications. And if the systemic bias keeps going in ML research, it’s going to end up normalized, and if so, any future tech with Machine Learning at its core will likely end up being less responsive, adaptable, and relevant to black users. We’re off to a rough start.

As a first step, we’d expect a commitment from companies and research using these algorithms to train their data based on ethically sourced images, and aim to establish percentage guidelines of inclusion in datasets for the sake of diversity. I read today that Google contractors reportedly paid black homeless people $5 for the right to take their pictures for the sake of including more racial diversity in their training data. That, too, seems like a rough start.

Bigger than that would be to include more diversity in the groups of people making these decisions in the first place — probably a more pressing topic. But I do worry that we all get one chance to take the right steps before datasets become “the norm” or “the reference” and we’re already tripping.

Am I Wrong? Great!

Anyway, if you’d like to run some numbers on your own, I’ve included the folders I’ve examined over at the NVLabs Google Drive. If you do a count on other folders, let me know. It’s unlikely that there is a treasure trove of multiculturalism in a single folder that would offset these numbers, but who knows?

You can reach out to me on Twitter if you want to weigh in, share your own research, or call me out for something I’ve done wrong. This is the result of a late night curiosity, not peer-reviewed or academic work, but it’s interesting nonetheless.

Reference Data

Black Women Data:

Set 00000: 29/999

Set 01000: 29/999

Set 02000: 20/999

Set 03000: 24/999

White Women Data:

Set 56000: 285/999

Set 18000: 253/999

Set 43000: 302/999

Set 22000: 312/999

Images Counted Toward Black Women Sample:

00112

00183

00195

00269

00302

00304

00337

00351

00375

00384

00394

00418

00451

00453

00468

00473

00560

00635

00663

00704

00706

00737

00759

00769

00785

00793

00853

00942

00984

00989

01012

01031

01051

01059

01064

01069

01074

01103

01104

01296

01338

01346

01408

01411

01421

01426

01447

01452

01493

01556

01598

01601

01626

01676

01753

01770

01869

01919

01953

02017

02158

02160

02167

02169

02224

02269

02332

02333

02366

02387

02438

02561

02681

02728

02841

02888

02896

02936

02994

03614

03005

03028

03032

03040

03046

03052

03087

03137

03206

03220

03238

03252

03279

03353

03380

03442

03614

03628

03692

03738

03788

03795

03817

03978